Abstract

The information communication technology sector will experience huge growth over the coming years, with 29.3 billion devices expected online by 2023, up from 18.4 billion in 2018. To reliably support the online services used by these billions of users, data centres have been built around the world to provide the millions of servers they contain with access to power, cooling and internet connectivity. Whilst the energy consumption of these facilities regularly receives mainstream and academic coverage, analysis of their water consumption is scarce. Data centres consume water directly for cooling, in some cases 57% sourced from potable water, and indirectly through the water requirements of non-renewable electricity generation. Although in the USA, data centre water consumption (1.7 billion litres/day) is small compared to total water consumption (1218 billion litres/day), there are issues of transparency with less than a third of data centre operators measuring water consumption. This paper examines the water consumption of data centres and the measurement of that consumption, highlights the lack of data available to assess water efficiency, and discusses where the industry is going in attempts to reduce future consumption.

Introduction

The information communication technology (ICT) sector is expecting huge growth over the coming years. By 2023, 5.3 billion people will have internet access, up from 3.9 billion in 20181. By then, 29.3 billion devices will be connected to the internet (up from 18.4 billion in 2018), with access speeds doubling between 2018 and 2023 to a global average of 110 Mbps1. More people having faster access to online services means internet traffic will double by 20222.

To reliably serve these billions of users, internet properties rely on millions of dedicated computers called servers. These servers are located in data centres, which provide reliable power, cooling and internet access. Around 40% of servers are in small data centres3 such as cabinets in an office side room, but newer facilities are increasingly “hyperscale” warehouses, hundreds of thousands of square meters in size, and run by the big three cloud vendors (Amazon Web Services, Google Cloud Platform, Microsoft Azure)4.

The energy consumption of data centres regularly receives attention in both the academic and mainstream press. Despite the ICT sector being responsible for some of the largest purchases of renewable energy5, there remains considerable uncertainty about total data centre energy consumption. Estimates for 2018 range from 200 TWh6 to 500 TWh7. Some extreme analyses even suggest energy consumption could quadruple by 20308, whereas other estimates show energy growth plateauing6. Regardless of the precise number, data centre energy is an important topic of public interest. However, it is just one aspect of the environmental footprint of ICT. A less well understood factor is water consumption.

Crucial for industry and agriculture, the availability and quality of water is a growing global concern9. Projections suggest that water demand will increase by 55% between 2000 and 2050 due to growth from manufacturing (+400%), thermal power generation (+140%) and domestic use (+130%)10. ICT is another sector contributing to that demand.

In Fiscal Year 2018 (FY18), Google reported 15.8 billion litres of water consumption, up from 11.4 billion litres in FY1711. Similarly, Microsoft reported using 3.6 billion litres in FY18, up from 1.9 billion litres in FY1712 (Fig. 1). Offices make up some of these totals, but data centres also use water.

Amazon does not publish water figures55. At time of writing, Google had not published its environmental report for FY19. Microsoft notes 50% of the change from FY17 to FY18 is from a change in methodology, the other 50% coming from organisational growth.

This paper examines the water consumption of data centres, how that consumption is measured by the ICT sector, and considers where the industry is going in attempts to reduce future water consumption.

Data centre water use

Total water consumption in the USA in 2015 was 1218 billion litres per day, of which thermoelectric power used 503 billion litres, irrigation used 446 billion litres and 147 billion litres per day went to supply 87% of the US population with potable water13.

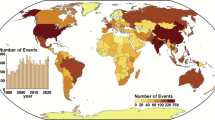

Data centres consume water across two main categories: indirectly through electricity generation (traditionally thermoelectric power) and directly through cooling. In 2014, a total of 626 billion litres of water use was attributable to US data centres4. This is a small proportion in the context of such high national figures. However, data centres compete with other users for access to local resources. A medium-sized data centre (15 megawatts (MW)) uses as much water as three average-sized hospitals, or more than two 18-hole golf courses14. Some progress has been made with using recycled and non-potable water, but from the limited figures available15 some data centre operators are drawing more than half of their water from potable sources (Fig. 2). This has been the source of considerable controversy in areas of water stress and highlights the importance of understanding how data centres use water.
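The scale comparison above is simple arithmetic and can be checked with a short sketch. It uses only the two figures quoted in the text. Note that they come from different years (2014 and 2015), so the resulting share is indicative rather than exact. The constant and function names are illustrative, not taken from any cited source.

```python
# Figures quoted in the text.
US_DATA_CENTRE_WATER_L_PER_YEAR = 626e9   # 2014, direct plus indirect use
US_TOTAL_WATER_L_PER_DAY = 1218e9         # 2015, all users

DAYS_PER_YEAR = 365


def litres_per_day(annual_litres: float) -> float:
    """Convert an annual volume into an average daily volume."""
    return annual_litres / DAYS_PER_YEAR


def share_of_total(part: float, total: float) -> float:
    """Return `part` as a fraction of `total`."""
    return part / total


dc_daily = litres_per_day(US_DATA_CENTRE_WATER_L_PER_YEAR)
dc_share = share_of_total(dc_daily, US_TOTAL_WATER_L_PER_DAY)

print(f"Data centres: {dc_daily / 1e9:.2f} billion litres/day")
print(f"Share of US total: {dc_share:.2%}")
```

This reproduces the figure of about 1.7 billion litres per day, which is roughly 0.14% of the national total. A national share this small says nothing about local pressure, which is why the comparison with hospitals and golf courses matters.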

Fig. 2: Digital Realty's consumption from potable water was 64% (2017), 65% (2018) and 57% (2019)15.

This section considers these two categories of data centre water consumption.

Water use in electricity generation

Water requirements are measured based on withdrawal or consumption. Consumption refers to water lost (usually through evaporation), whereas water withdrawal refers to water taken from a source such as natural surface water, underground water, reclaimed water or treated potable water, and then later returned to the source16.

Power plants generate heat using fossil fuels such as coal and gas, or nuclear fission, to convert water into steam which rotates a turbine, thereby generating electricity. Water is a key part of this process, which involves pre-treating the source water to remove corroding contaminants, and post-treatment to remove brines. Once heated into steam to rotate the turbine, water is lost through evaporation, discharged as effluent or recirculated; sometimes all three16.

The US average water intensity for electricity generation for 2015 was 2.18 litres per kilowatt hour (L/kWh)17, but fuel and generator technology type have a major impact on cooling water requirements. For example, a dry air cooling system for a natural gas combined cycle generator consumes and withdraws 0.00–0.02 L/kWh, whereas a wet cooling (open recirculating) system for a coal steam turbine consumes 0.53 L/kWh and withdraws 132.5 L/kWh. Efficiency varies significantly, with consumption ranging from 0.00 to 4.4 L/kWh and withdrawal ranging from 0.31 to 533.7 L/kWh depending on the system characteristics16.
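To make the intensity figures concrete, the following sketch converts an electrical load into the water associated with generating that electricity. It assumes a hypothetical facility drawing a constant 1 MW all year and treats the quoted US average of 2.18 L/kWh as the applicable intensity. Both are illustrative assumptions, and the function names are not from any cited source.

```python
HOURS_PER_YEAR = 8760

# Intensities quoted in the text (litres per kilowatt hour).
US_AVERAGE_INTENSITY = 2.18
CONSUMPTION_RANGE = (0.00, 4.4)  # lowest and highest consumption figures


def annual_energy_kwh(average_load_mw: float) -> float:
    """Energy used in a year by a load that draws `average_load_mw` constantly."""
    return average_load_mw * 1000 * HOURS_PER_YEAR


def indirect_water_litres(energy_kwh: float, intensity_l_per_kwh: float) -> float:
    """Water associated with generating `energy_kwh` of electricity."""
    return energy_kwh * intensity_l_per_kwh


# Hypothetical 1 MW constant draw (an assumption, not a figure from the text).
energy = annual_energy_kwh(1.0)
typical = indirect_water_litres(energy, US_AVERAGE_INTENSITY)
low, high = (indirect_water_litres(energy, i) for i in CONSUMPTION_RANGE)

print(f"Energy: {energy:,.0f} kWh/year")
print(f"Water at US average intensity: {typical / 1e6:.1f} million litres/year")
print(f"Range across systems: {low / 1e6:.1f} to {high / 1e6:.1f} million litres/year")
```

Under these assumptions the same load is associated with anything from no water to tens of millions of litres a year, depending on how the electricity is generated.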

Hydropower systems also use large volumes of water despite being considered a cleaner source of electricity. Water evaporation from open reservoirs is a major source of losses, particularly in dry regions and where water is not pumped back into the reservoir or passed onto downstream users. The US national average water consumption for hydropower is 16.8 L/kWh compared to 1.25 L/kWh for thermoelectricity17.

With the majority of generation still from fossil fuels18, the transition to renewables is important for both carbon and water intensity. Only solar and wind energy do not involve water in generation, yet both still consume water in the manufacturing and construction processes9. Estimates suggest that by 2030, moving to wind and solar energy could reduce water withdrawals by 50% in the UK, 25% in the USA, Germany and Australia and 10% in India19.
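The effect of the generation mix on water intensity can be sketched as a weighted average. The per-source intensities below are the US national averages quoted above, with wind and solar set to zero for the generation stage only (manufacturing and construction water is ignored). The two mixes are hypothetical and chosen purely for illustration.

```python
from typing import Mapping

# Operational water consumption by source, in L/kWh, as quoted in the text.
WATER_INTENSITY = {
    "thermoelectric": 1.25,
    "hydropower": 16.8,
    "wind_solar": 0.0,  # generation stage only; an explicit simplification
}


def blended_intensity(mix: Mapping[str, float]) -> float:
    """Weighted-average water intensity for a generation mix whose shares sum to 1."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("generation shares must sum to 1")
    return sum(share * WATER_INTENSITY[source] for source, share in mix.items())


# Hypothetical mixes (assumptions, not data from the cited studies).
mix_before = {"thermoelectric": 0.90, "hydropower": 0.05, "wind_solar": 0.05}
mix_after = {"thermoelectric": 0.60, "hydropower": 0.05, "wind_solar": 0.35}

before = blended_intensity(mix_before)
after = blended_intensity(mix_after)
print(f"Before: {before:.3f} L/kWh, after: {after:.3f} L/kWh")
print(f"Reduction: {1 - after / before:.1%}")
```

Because hydropower has such a high intensity, even a small hydropower share dominates the blended figure in this sketch, which illustrates why not all renewables are equal from a water perspective.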

In the data centre sector, Google and Microsoft are leading the shift to renewables. Between 2010 and 2018, the number of servers increased 6 times, network traffic increased 10 times and storage capacity increased by 25 times, yet energy consumption has only grown by 6%6. A major contributor to this has been the migration to cloud computing, as of 2020 estimated to be a $236 billion market20 and responsible for managing 40% of servers4.

Due to their size, the cloud providers have been able to invest in highly efficient operations. Although often criticised as a metric of efficiency21, an indicator of this can be seen through low power usage effectiveness (PUE) ratios. PUE is a measure of how much of the energy input is used by the ICT equipment as opposed to the data centre infrastructure such as cooling22, defined as follows:

$$\mathrm{PUE} = \frac{\text{Total data centre energy consumption}}{\text{ICT equipment energy consumption}}$$

PUE is relevant to understanding indirect water consumption because it indicates how efficient a particular facility is at its primary purpose: operating ICT equipment. This includes servers, networking and storage devices. An ideal PUE of 1.0 would mean 100% of the energy going to power useful services running on the ICT equipment rather than wasted on cooling, lighting and power distribution. Water is consumed indirectly through the power generation, so more efficient use of that power means more efficient use of water.

Traditional data centres have reported PUEs reducing from 2.23 in 2010 to 1.93 in 20206. In contrast, the largest “hyperscale” cloud providers report PUEs ranging from 1.25 to 1.18. Some report even better performance, such as Google with a Q2 2020 fleet wide trailing 12-month PUE of 1.1023.
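The practical meaning of these ratios can be sketched numerically, taking PUE as total facility energy divided by ICT equipment energy. The PUE values are those quoted above, while the ICT load of 1000 kWh is a hypothetical figure used only to make the comparison concrete.

```python
def facility_energy_kwh(ict_energy_kwh: float, pue: float) -> float:
    """Total facility energy needed to deliver `ict_energy_kwh` at a given PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return ict_energy_kwh * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility energy that does not reach the ICT equipment."""
    return (pue - 1.0) / pue


ICT_ENERGY_KWH = 1000.0  # hypothetical ICT load, an assumption for illustration

traditional = facility_energy_kwh(ICT_ENERGY_KWH, 1.93)   # traditional, 2020
google_fleet = facility_energy_kwh(ICT_ENERGY_KWH, 1.10)  # Google, Q2 2020
saving = 1 - google_fleet / traditional

print(f"Traditional: {traditional:.0f} kWh ({overhead_share(1.93):.0%} overhead)")
print(f"Google fleet: {google_fleet:.0f} kWh ({overhead_share(1.10):.0%} overhead)")
print(f"Energy saved for the same ICT load: {saving:.0%}")
```

For the same ICT work, the lower PUE needs about 43% less electricity in this sketch, and therefore proportionally less water at the power plant if the generation source is unchanged.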

As data centre efficiency reaches such levels, further gains are more difficult. This has already started to show up in plateauing PUE numbers24, which means the expected increase in future usage may soon be unable to be offset by efficiency improvements25. As more equipment is deployed, and more data centres are needed to house that equipment, energy demand will increase. If that energy is not sourced from renewables, indirect water consumption will increase.

Power generation source is therefore a key element in understanding data centre water consumption, with PUE an indicator of how efficiently that power is used, but it is just the first category. Direct water use is also important—all that equipment needs cooling, which in some older facilities can consume up to 30% of total data centre energy demand26,27,28.

Water use in data centre cooling

ICT equipment generates heat and so most devices must have a mechanism to manage their temperature. Drawing cool air over hot metal transfers heat energy to that air, which is then pushed out into the environment. This works because the computer temperature is usually higher than the surrounding air.

The same process occurs in data centres, just at a larger scale. ICT equipment is located within a room or hall, heat is ejected from the equipment via an exhaust and that air is then extracted, cooled and recirculated. Data centre rooms are designed to operate within temperature ranges of 20–22 °C, with a lower bound of 12 °C29. As temperatures increase, equipment failure rates also increase, although not necessarily linearly30.

There are several different mechanisms for data centre cooling27,28, but the general approach involves chillers reducing air temperature by cooling water—typically to 7–10 °C31—which is then used as a heat transfer mechanism. Some data centres use cooling towers where external air travels across a wet media so the water evaporates. Fans expel the hot, wet air and the cooled water is recirculated32. Other data centres use adiabatic economisers where water sprayed directly into the air flow, or onto a heat exchange surface, cools the air entering the data centre33. With both techniques, the evaporation results in water loss. A small 1 MW data centre using one of these types of traditional cooling can use around 25.5 million litres of water per year32.

Cooling the water is the main source of energy consumption. Raising the chiller water temperature from the usual 7–10 °C to 18–20 °C can reduce expenses by 40% due to the reduced temperature difference between the water and the air. Costs depend on the seasonal ambient temperature of the data centre location. In cooler regions, less cooling is required and instead free air cooling can draw in cold air from the external environment31. This also means smaller chillers can be used, reducing capital expenditure by up to 30%31. Both Google34 and Microsoft35 have built data centres without chillers, but this is difficult in hot regions36.

Measuring data centre water use

In the same way that PUE represents data centre energy consumption, water usage effectiveness (WUE) is a metric for data centre water consumption37, defined as follows:

$$\mathrm{WUE} = \frac{\text{Annual site water usage (L)}}{\text{ICT equipment energy (kWh)}}$$

The unit is L/kWh. However, this offers a limited view because it only includes the water consumed on-site. As discussed above, water from electricity generation is also important for understanding the total data centre footprint. A similar metric was published in the academic literature38, but the industry also proposed WUEsource at the same time as WUE37, defined as follows:

$$\mathrm{WUE_{source}} = \frac{\text{Annual source energy water usage (L)} + \text{Annual site water usage (L)}}{\text{ICT equipment energy (kWh)}}$$

The availability of water intensity factors to calculate annual source energy water usage is a limitation of this metric. Some countries publish figures, such as the US National Renewable Energy Laboratory39, but there is large variance across and within technologies40, and they become out of date as the grid decarbonises41.
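A minimal sketch of both metrics follows, taking WUE as annual site water divided by ICT equipment energy and WUEsource as the same ratio with the water used to generate the facility's electricity added to the numerator. The inputs combine figures quoted earlier (25.5 million litres per year for a 1 MW facility, a PUE of 1.93 and the US average intensity of 2.18 L/kWh). Treating the 1 MW as a constant ICT load and applying these factors to one hypothetical facility are assumptions made for illustration only.

```python
HOURS_PER_YEAR = 8760


def wue(site_water_l: float, ict_energy_kwh: float) -> float:
    """Water usage effectiveness: on-site water per unit of ICT energy (L/kWh)."""
    return site_water_l / ict_energy_kwh


def source_energy_water_l(total_energy_kwh: float, intensity_l_per_kwh: float) -> float:
    """Water used off-site to generate the facility's electricity."""
    return total_energy_kwh * intensity_l_per_kwh


def wue_source(site_water_l: float, source_water_l: float, ict_energy_kwh: float) -> float:
    """WUEsource: on-site plus generation water per unit of ICT energy (L/kWh)."""
    return (site_water_l + source_water_l) / ict_energy_kwh


# Hypothetical facility built from figures quoted in the text.
ict_energy = 1.0 * 1000 * HOURS_PER_YEAR   # assumed constant 1 MW ICT load
site_water = 25.5e6                        # litres/year for traditional cooling
pue = 1.93                                 # traditional data centre, 2020
grid_intensity = 2.18                      # US average, L/kWh

source_water = source_energy_water_l(ict_energy * pue, grid_intensity)
site_only = wue(site_water, ict_energy)
with_source = wue_source(site_water, source_water, ict_energy)

print(f"WUE: {site_only:.2f} L/kWh")
print(f"WUEsource: {with_source:.2f} L/kWh")
```

In this sketch the generation water is larger than the on-site cooling water, so reporting WUE alone would leave out more than half of the footprint.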

This may be one reason why less than a third of data centre operators track any water metrics and water conservation is ranked as a low priority32. Facebook is one of the few companies to report WUE, even publishing real-time online dashboards for their Lulea42, Forest City43 and Prineville44 data centres. None of the top three cloud vendors (by usage45) publish water efficiency metrics, although Google11 and Microsoft12 both report total water consumption (Fig. 1).

Cloud vendor water use

The move to cloud computing is a major reason behind claims about flattening data centre energy usage over the last few years6. In the past, organisations had to buy physical equipment and lease space to deploy it in a data centre. With cloud computing, those physical resources still exist but are instead owned and operated by the cloud vendor, and split into virtual units sold by the second, hour or per user request. This means resources can be more efficiently allocated and the cloud vendors can afford to spend money on improving efficiency that would otherwise not be worth the cost to companies operating at smaller scale. However, it also means customers no longer have visibility into the resource consumption behind their purchases, thereby making it impossible to assess the environmental impact of their cloud use46.

Google and Microsoft have started to compete on their sustainability credentials47 and both have been reporting detailed environmental metrics for several years (Fig. 1). However, neither company attributes their water consumption to data centres, and their figures only represent direct water usage, ignoring water used in electricity generation. Breakdowns of water source are absent, which has proven controversial when they compete for stressed potable sources.

Google considers water use to be a trade secret and has engaged in tactics to prevent the release of information about how it works with local utilities48. In South Carolina, Google has free access to pump 1.9 million litres per day due to how state law regulates access to aquifers49. This became controversial with local residents and conservation groups when Google applied to triple the daily volume50, because at the same time the local utility was asked to reduce its withdrawal by 57% over the following 4 years48. Google has worked on water conservation projects in the past51, and is leading the industry on energy projects such as access to renewable electricity52, so its aggressive approach to water use seems at odds with its overall sustainability strategy.

Amazon plans to be carbon neutral by 204053 (Google and Microsoft achieved this in 2007 and 2012, respectively) and has lagged behind on when it plans to match all its electricity consumption with 100% renewables (by 202554). Amazon’s ecommerce and logistics businesses mean it has a much larger environmental footprint, which is not directly comparable with Google or Microsoft, but it could still break out figures for its Amazon Web Services cloud business. Instead it offers only vague statements about its water consumption55. Meanwhile, Microsoft announced its intention to replenish more water than it consumes by 203056. Amazon stands out for its lack of transparency.

Alternative water sources

Where data centres own and operate the entire facility, there is more flexibility for exploring alternative sources of water, and different techniques for keeping ICT equipment cool.

Google’s Hamina data centre in Finland has used sea water for cooling since it opened in 201157. Using existing pipes from when the facility was a paper mill, the cold sea water is pumped into heat exchangers within the data centre. The sea water is kept separate from the freshwater, which circulates within the heat exchangers. When expelled, the hot water is mixed with cold sea water before being returned to the sea58.

Despite Amazon’s poor environmental efforts in comparison to Google and Microsoft, it is expanding its use of non-potable water55. Data centre operators have a history of using drinking water for cooling, and most source their water from reservoirs because access to rainfall, grey water and surface water is seen as unreliable32. Digital Realty, a large global data centre operator, is one of the few companies publishing a water source breakdown (Fig. 2). Reducing this proportion is important because the processing and filtering requirements of drinking water increase the lifecycle energy footprint. The embodied energy in the manufacturing of any chemicals required for filtering must also be considered. This increases the overall carbon footprint of a data centre.

Amazon claims to be the first data centre operator approved for using recycled water for direct evaporative cooling. The technique is deployed in its data centres in Northern Virginia and Oregon, and the company plans to retrofit facilities in Northern California55. However, Digital Realty faced delays when working with a local utility in Los Angeles because it needed a new pipeline to pump recycled water to its data centres14.

Microsoft’s Project Natick is a different attempt to tackle this challenge by submerging a sealed data centre under water. Tests concluded off the Orkney Islands in 2020 showed that 864 servers could run reliably for 2 years with cooling provided by the ambient sea temperature, and electricity from local renewable sources59. The potential to make use of natural cooling is encouraging. However, the small scale of these systems could mean higher costs, making them appropriate only for certain high-value use cases.

ICT equipment is deployed in racks, aligned in rows, within a data centre room. Traditional cooling manages the temperature of the room as a whole, but this is not as efficient as more targeted cooling. Moving from cooling the entire room to focused cooling of a row of servers, or even a specific rack, can achieve energy savings of up to 29%60, and is the subject of a Google patent granted in 201261.

This is becoming necessary because of the increase in rack density. Microsoft is deploying new hardware such as the Nvidia DGX-2 Graphics Processing Unit that consumes 10 kW for machine learning workloads, and existing cooling techniques are proving insufficient62. Using low-boiling-point liquids is more efficient than using ambient air cooling63 and past experiments have shown that a super-computing system can transfer 96% of excess heat to water64, with 45% less heat transferred to the ambient air65. Microsoft is now testing these techniques in its cloud data centres62.

These projects show promise for the future, but there are still gains to be had from existing infrastructure. Google has used its AI expertise to reduce energy use from cooling by up to 40% through hourly adjustments to environmental controls based on predicted weather, internal temperatures and pressure within its existing data centres66,67. Another idea is to co-locate data centres and desalination facilities so they can share energy intensive operations68. That most of the innovation is now led by the big three cloud providers demonstrates their scale advantage. By owning, managing and controlling the entire value chain from server design through to the location of the building, cloud vendors have been able to push data centre efficiency to levels impossible for more traditional operators to achieve.

However, only the largest providers build their own data centres, and even they often work with other data centre operators in smaller regions. For example, as of the end of 2020, Google lists 21 data centres69, publishes PUE for 1723, but has over 100 points of presence (PoPs) around the world70. These PoPs are used to provide services closer to its users, for example, to provide faster load times when streaming YouTube videos71. Whilst Google owns the equipment deployed in the PoP, it does not have the same level of control as it does when it designs and builds its own data centres. Even so, Google has explored efficiency improvements such as optimising air venting, increasing temperature from 22 to 27 °C, deploying plastic curtains to establish cool aisles for more heat sensitive equipment and improving the design of air conditioning return air flow. In a case study for one of its PoPs, this work was shown to reduce PUE from 2.4 to 1.7 and saved US$67,000 per year in energy for a cost of US$25,00072.
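The economics of the PoP case study can be sketched with two small calculations: a simple payback period and the fall in total facility energy implied by the PUE change. The inputs are the figures quoted above. Holding the ICT load constant and ignoring discounting or maintenance costs are simplifying assumptions.

```python
def simple_payback_months(cost: float, annual_saving: float) -> float:
    """Months needed for the annual saving to repay the up-front cost."""
    return cost / annual_saving * 12


def facility_energy_reduction(pue_before: float, pue_after: float) -> float:
    """Fractional fall in total facility energy for an unchanged ICT load."""
    return 1 - pue_after / pue_before


payback = simple_payback_months(cost=25_000, annual_saving=67_000)
reduction = facility_energy_reduction(pue_before=2.4, pue_after=1.7)

print(f"Payback period: {payback:.1f} months")
print(f"Facility energy reduction: {reduction:.0%}")
```

On these figures the retrofit pays for itself in under five months and cuts facility energy by a little under 30%, which suggests that similar measures may be worthwhile even for operators without hyperscale budgets.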

Conclusions

Data centre water efficiency deserves greater attention. Annual reports show water consumption for cooling directly paid for by the operator, so there is an economic incentive to increase efficiency. As the total energy share of cooling has fallen with improving PUEs, the focus has been on electricity consumption, and so water has been a low priority for the industry. However, the largest contributor to the water footprint of a data centre is electricity generation. Without metrics like WUEsource this is invisible.

Moving to the cloud is more efficient than running physical infrastructure in owned or leased facilities, but transparency remains a major issue73. Microsoft and Google both produce detailed environmental reports, but only in aggregate. The largest cloud provider, Amazon, publishes almost nothing at all. Facebook is an exception, but as they do not sell cloud services, third parties are unable to take advantage of their infrastructure in the same way they can buy computing services from Amazon, Google or Microsoft.

With energy consumption and carbon emissions this is starting to change. Google and Microsoft publish their Greenhouse Gas emissions and Microsoft has developed a Sustainability Calculator so customers can calculate the emissions associated with their cloud resources74. Part of the reason behind this is likely to be pressure from customers due to legal reporting requirements now imposed on the largest public companies75,76. However, there are no such requirements for water.

Google and Microsoft are keen to publicise their renewable energy projects, offset purchases, renewables matching and energy efficiency improvements. Both companies publish water consumption figures (Microsoft also includes withdrawal) but the same level of detail provided about energy projects is lacking for water. This is an opportunity for data centre operators to publish more than just their PUE ratios, a metric which was never intended to compare efficiency between facilities77 yet has become standard practice to report78.

The industry has already taken the first step by defining water metrics. Now they need to use them. Operators and cloud vendors should calculate and publish WUEsource alongside PUE. A breakdown of water source, such as the one published by Digital Realty15, is also important. Using potable water diverts valuable resources away from the local community, a situation which is likely to get worse as water scarcity becomes more of a problem. This would improve transparency and move the industry from thinking mainly about use-stage energy to considering the complete lifecycle environmental impacts of their business.

Once metrics are introduced internally and released publicly, data centre operators can work to improve. This starts with buying renewable energy so that the water intensity of the energy is as low as possible. Free cooling should be preferred but where water is necessary for cooling, recycled and non-potable water must make up the majority of withdrawals. Direct liquid, rack or room cooling technologies offer greatest efficiency but require the ICT equipment owner to also own, or closely work with the data centre.

Corporate water stewardship is growing in importance79, yet it is difficult to understand the current situation due to the lack of reporting. Google and Microsoft are leading in renewable energy, but even they are secretive about their water resource management. It is easy to criticise Amazon’s lack of transparency but they are not alone: the entire data centre industry suffers from a lack of transparency. However, operators will only report data when their customers ask for it. Organisations who lease space in data centres must take responsibility by asking not just for PUE metrics but also about water consumption, and by making both part of their vendor selection criteria. Considering how much data is stored in data centres, it is ironic how little data there is available about how they operate.

Change history

02 November 2021

A Correction to this paper has been published: https://doi.org/10.1038/s41545-021-00140-3

References

Cisco. Cisco annual internet report (2018-2023) white paper. https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.html (2020).

Kamiya, G. Data Centres and Data Transmission Networks—Analysis. https://www.iea.org/reports/data-centres-and-data-transmission-networks (2020).

Ganeshalingam, M., Shehabi, A. & Desroches, L.-B. Shining a light on small data centers in the U.S. Tech. Rep. LBNL-2001025, Lawrence Berkeley National Lab, Berkeley. https://escholarship.org/uc/item/8dh8j3kq (2017).

Shehabi, A. et al. United States Data Center Energy Usage Report. Tech. Rep. LBNL-1005775, Lawrence Berkeley National Laboratory, California. http://www.osti.gov/servlets/purl/1372902/ (2016).

United States Environmental Protection Agency. Green power partnership national top 100. https://www.epa.gov/greenpower/green-power-partnership-national-top-100 (2020).

Masanet, E., Shehabi, A., Lei, N., Smith, S. & Koomey, J. Recalibrating global data center energy-use estimates. Science 367, 984–986 (2020).

Bashroush, R. & Lawrence, A. Beyond PUE: tackling IT’s wasted terawatts. Tech. Rep. UII-34, Uptime Institute. https://uptimeinstitute.com/beyond-pue-tackling-it%E2%80%99s-wasted-terawatts (2020).

Andrae, A. S. G. & Edler, T. On global electricity usage of communication technology: trends to 2030. Challenges 6, 117–157 (2015).

UNESCO. The United Nations world water development report 2020: water and climate change (UNESCO, 2020). https://unesdoc.unesco.org/ark:/48223/pf0000372985.locale=en.

OECD. OECD Environmental Outlook to 2050 (OECD Publishing, 2012). https://doi.org/10.1787/9789264122246-en.

Google. Environmental Report 2019. Tech. Rep., Google. https://services.google.com/fh/files/misc/google_2019-environmental-report.pdf (2020).

Microsoft. 2019 data factsheet: environmental indicators. Tech. Rep., Microsoft. http://query.prod.cms.rt.microsoft.com/cms/api/am/binary/RE3455q (2020).

Dieter, C. A. et al. Estimated use of water in the United States in 2015. Report 1441, US Geological Survey, Reston, VA. https://doi.org/10.3133/cir1441 (2018).

FitzGerald, D. Data centers and hidden water use. Wall Street Journal. https://www.wsj.com/articles/data-centers-1435168386 (2015).

Digital Realty. Environmental performance. https://www.digitalrealty.com/environmental-social-and-governance-report-2019-highlights/environmental-performance (2019).

Pan, S.-Y., Snyder, S. W., Packman, A. I., Lin, Y. J. & Chiang, P.-C. Cooling water use in thermoelectric power generation and its associated challenges for addressing water-energy nexus. Water-Energy Nexus 1, 26–41 (2018).

Lee, U., Han, J., Elgowainy, A. & Wang, M. Regional water consumption for hydro and thermal electricity generation in the United States. Appl. Energy 210, 661–672 (2018).

IEA. Electricity generation by fuel and scenario, 2018-2040. https://www.iea.org/data-and-statistics/charts/electricity-generation-by-fuel-and-scenario-2018-2040 (2019).

IRENA. Renewable Energy in the Water, Energy and Food Nexus. Tech. Rep., IRENA. https://www.irena.org/-/media/Files/IRENA/Agency/Publication/2015/IRENA_Water_Energy_Food_Nexus_2015.pdf (2015).

Adams, J. & Cser, A. Forrester data: cloud security solutions forecast, 2016 To 2021 (global). Tech. Rep., Forrester. https://www.tatacommunications.com/wp-content/uploads/2019/02/Forrester-Report.pdf (2017).

Brady, G. A., Kapur, N., Summers, J. L. & Thompson, H. M. A case study and critical assessment in calculating power usage effectiveness for a data centre. Energy Convers. Manag. 76, 155–161 (2013).

ISO. ISO/IEC 30134-2:2016. https://www.iso.org/standard/63451.html (2016).

Google. Efficiency—data centers. https://www.google.com/about/datacenters/efficiency/ (2020).

Lawrence, A. Data center PUEs flat since 2013. https://journal.uptimeinstitute.com/data-center-pues-flat-since-2013/ (2020).

Shehabi, A., Smith, S. J., Masanet, E. & Koomey, J. Data center growth in the United States: decoupling the demand for services from electricity use. Environ. Res. Lett. 13, 124030 (2018).

Iyengar, M., Schmidt, R. & Caricari, J. Reducing energy usage in data centers through control of room air conditioning units. In 2010 12th IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems, 1–11 (IEEE, Las Vegas, NV, 2010).

Ebrahimi, K., Jones, G. F. & Fleischer, A. S. A review of data center cooling technology, operating conditions and the corresponding low-grade waste heat recovery opportunities. Renew. Sustain. Energy Rev. 31, 622–638 (2014).

Capozzoli, A. & Primiceri, G. Cooling systems in data centers: state of art and emerging technologies. Energy Procedia 83, 484–493 (2015).

Miller, C. Energy efficiency guide: data center temperature. https://www.datacenterknowledge.com/archives/2011/03/10/energy-efficiency-guide-data-center-temperature (2011).

Miller, R. Intel: servers do fine with outside air. https://www.datacenterknowledge.com/archives/2008/09/18/intel-servers-do-fine-with-outside-air (2008).

Frizziero, M. Rethinking chilled water temps bring big savings in data center cooling. https://blog.se.com/datacenter/2016/08/17/water-temperatures-data-center-cooling/ (2016).

Heslin, K. Ignore data center water consumption at your own peril. https://journal.uptimeinstitute.com/dont-ignore-water-consumption/ (2016).

Frizziero, M. Why water use is a key consideration when cooling your data center. https://blog.se.com/datacenter/2018/05/10/why-water-use-consideration-cooling-data-center/ (2018).

Miller, R. Google’s chiller-less data center. https://www.datacenterknowledge.com/archives/2009/07/15/googles-chiller-less-data-center (2009).

Miller, R. Microsoft’s chiller-less data center. https://www.datacenterknowledge.com/archives/2009/09/24/microsofts-chiller-less-data-center (2009).

David, M. P. et al. Experimental characterization of an energy efficient chiller-less data center test facility with warm water cooled servers. In 2012 28th Annual IEEE Semiconductor Thermal Measurement and Management Symposium (SEMI-THERM) 232–237 (IEEE, San Jose, CA, 2012).

Patterson, M. Water usage effectiveness (WUE): a green grid data center sustainability metric. Tech. Rep. WP35, The Green Grid. https://www.thegreengrid.org/en/resources/library-and-tools/238-Water-Usage-Effectiveness-(WUE):-A-Green-Grid-Data-Center-Sustainability-Metric- (2011).

Sharma, R., Shah, A., Bash, C., Christian, T. & Patel, C. Water efficiency management in datacenters: metrics and methodology. In 2009 IEEE International Symposium on Sustainable Systems and Technology 1–6 (IEEE, Tempe, AZ, 2009).

Torcellini, P., Long, N. & Judkoff, R. Consumptive water use for U.S. power production. Tech. Rep. NREL/TP-550-33905, 15005918, National Renewable Energy Laboratory. http://www.osti.gov/servlets/purl/15005918/ (2003).

Macknick, J., Newmark, R., Heath, G. & Hallett, K. C. Operational water consumption and withdrawal factors for electricity generating technologies: a review of existing literature. Environ. Res. Lett. 7, 045802 (2012).

Peck, J. J. & Smith, A. D. Quantification and regional comparison of water use for power generation: a California ISO case study. Energy Rep. 3, 22–28 (2017).

Facebook. Lulea Data Center. https://www.facebook.com/LuleaDataCenter/app/115276998849912 (2020).

Facebook. Forest City Data Center. https://www.facebook.com/ForestCityDataCenter/app/288655784601722 (2020).

Facebook. Prineville Data Center. https://www.facebook.com/PrinevilleDataCenter/app/399244020173259 (2020).

Flexera. RightScale 2019 State of the Cloud Report. Tech. Rep., Flexera, Inc. https://resources.flexera.com/web/media/documents/rightscale-2019-state-of-the-cloud-report-from-flexera.pdf (2019).

Mytton, D. Assessing the suitability of the Greenhouse Gas Protocol for calculation of emissions from public cloud computing workloads. J. Cloud Comput. 9, 45 (2020).

Mytton, D. Fighting over who has the greenest public cloud. https://davidmytton.blog/fighting-over-who-has-the-greenest-public-cloud/ (2020).

Sattiraju, N. Google data centers’ secret cost: billions of gallons of water. Bloomberg.com. https://www.bloomberg.com/news/features/2020-04-01/how-much-water-do-google-data-centers-use-billions-of-gallons (2020).

Petersen, B. Google’s controversial groundwater withdrawal sparks question of who owns South Carolina water. https://www.postandcourier.com/news/googles-controversial-groundwater-withdrawal-sparks-question-of-who-owns-south-carolina-water/article_bed9179c-1baa-11e7-983e-03d6b33a01e7.html (2017).

McCammon, S. Google moves in and wants to pump 1.5 million gallons of water per day. https://www.npr.org/2017/05/08/527214026/google-moves-in-and-wants-to-pump-1-5-million-gallons-of-water-per-day (2017).

Brown, J. Helping the Hooch with water conservation at our Douglas County data center. https://green.googleblog.com/2012/03/helping-hooch-with-water-conservation.html (2012).

Pichai, S. Our third decade of climate action: realizing a carbon-free future. https://blog.google/outreach-initiatives/sustainability/our-third-decade-climate-action-realizing-carbon-free-future/ (2020).

Amazon. Amazon co-founds the climate pledge, setting goal to meet the Paris Agreement 10 years early. https://press.aboutamazon.com/news-releases/news-release-details/amazon-co-founds-climate-pledge-setting-goal-meet-paris/ (2019).

Amazon. Amazon announces $2 billion climate pledge fund to invest in companies building products, services, and technologies to decarbonize the economy and protect the planet. https://press.aboutamazon.com/news-releases/news-release-details/amazon-announces-2-billion-climate-pledge-fund-invest-companies/ (2020).

Amazon. Reducing water in data centers. https://sustainability.aboutamazon.com/environment/the-cloud/data-centers (2019).

Smith, B. Microsoft will replenish more water than it consumes by 2030. https://blogs.microsoft.com/blog/2020/09/21/microsoft-will-replenish-more-water-than-it-consumes-by-2030/ (2020).

Judge, P. Google to invest $670m to build a second data center in Hamina, Finland. https://www.datacenterdynamics.com/en/news/google-invest-670m-to-build-second-facility-hamina-finland-/ (2019).

Levy, S. Where servers meet saunas: a visit to Google’s Finland data center. Wired. https://www.wired.com/2012/10/google-finland-data-center-2/ (2012).

Roach, J. Microsoft finds underwater datacenters are reliable, practical and use energy sustainably. https://news.microsoft.com/innovation-stories/project-natick-underwater-datacenter/ (2020).

Moazamigoodarzi, H., Tsai, P. J., Pal, S., Ghosh, S. & Puri, I. K. Influence of cooling architecture on data center power consumption. Energy 183, 525–535 (2019).

Hamburgen, W. et al. Modular data center cooling. https://patents.google.com/patent/US8320125B1/en (2012).

Russinovich, M. Inside Azure datacenter architecture with Mark Russinovich | BRK3097. https://www.youtube.com/watch?v=X-0V6bYfTpA&feature=youtu.be&t=1390 (2020).

Meijer, G. I. Cooling energy-hungry data centers. Science 328, 318–319 (2010).

Ellsworth, M. J. Jr., Goth, G. F., Zoodsma, R. J., Arvelo, A., Campbell, L. A. & Anderl, W. J. An overview of the IBM Power 775 supercomputer water cooling system. J. Electron. Packag. 134, 020906. https://doi.org/10.1115/1.4006140 (2012).

Ellsworth, M. J. & Iyengar, M. K. Energy efficiency analyses and comparison of air and water cooled high performance servers. In ASME 2009 InterPACK Conference 907–914 (American Society of Mechanical Engineers Digital Collection, 2010).

Evans, R. & Gao, J. DeepMind AI reduces Google data centre cooling bill by 40%. https://deepmind.com/blog/article/deepmind-ai-reduces-google-data-centre-cooling-bill-40 (2016).

Gao, J. Machine learning applications for data center optimization. Tech. Rep., Google. https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/42542.pdf (2017).

Hamilton, J. Data center cooling done differently—perspectives. https://perspectives.mvdirona.com/2014/08/data-center-cooling-done-differently/ (2014).

Google. Google data center locations. https://www.google.com/about/datacenters/locations/ (2020).

Google. Google edge network infrastructure. https://peering.google.com/#/infrastructure (2020).

Loh, F. et al. From click to playback: a dataset to study the response time of mobile YouTube. In Proceedings of the 10th ACM Multimedia Systems Conference MMSys 19, 267–272 (Association for Computing Machinery, New York, NY, 2019).

Google. Google’s green data centers: network POP case study. https://static.googleusercontent.com/media/www.google.com/en//corporate/datacenter/dc-best-practices-google.pdf (2011).

Mytton, D. Hiding greenhouse gas emissions in the cloud. Nat. Clim. Change 10, 701 (2020).

Joppa, L. Progress on our goal to be carbon negative by 2030. https://blogs.microsoft.com/on-the-issues/2020/07/21/carbon-negative-transform-to-net-zero/ (2020).

European Commission. EU taxonomy for sustainable activities. https://ec.europa.eu/info/business-economy-euro/banking-and-finance/sustainable-finance/eu-taxonomy-sustainable-activities_en (2020).

Government UK. Streamlined energy and carbon reporting. https://www.gov.uk/government/consultations/streamlined-energy-and-carbon-reporting (2018).

van de Voort, T., Zavrel, V., Torrens Galdiz, I. & Hensen, J. Analysis of performance metrics for data center efficiency - should the power utilization effectiveness PUE still be used as the main indicator? (Part 1). REHVA J. 01/2017, 5–11 (2017).

Zoie, R. C., Mihaela, R. D. & Alexandru, S. An analysis of the power usage effectiveness metric in data centers. In 2017 5th International Symposium on Electrical and Electronics Engineering (ISEEE) 1–6 (IEEE, 2017).

Yu, H.-C., Kuo, L. & Ma, B. The drivers of corporate water disclosure in enhancing information transparency. Sustainability 12, 385 (2020).

Ethics declarations

Competing interests

D.M. has a financial interest in StackPath, LLC, an edge computing company, and was engaged by Uptime Institute as a Research Affiliate from December 2020.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mytton, D. Data centre water consumption. npj Clean Water 4, 11 (2021). https://doi.org/10.1038/s41545-021-00101-w
